Information theory and coding basics

Information theory

Information theory is the theoretical branch of communication, developed by the American mathematician Claude Shannon. It deals with the mathematical modelling and analysis of communication systems. Among others, it answers two fundamental questions of communication theory:

- Signal (data) compression limit

- Ultimate transmission rate over a noisy channel (for reliable communication)

Every morning, one reads the newspaper to receive information. A message is said to convey information if two key elements are present in it:

- Change in knowledge

- Uncertainty (unpredictability)

Information theory talks about:

- How to measure the amount of information?

- How to measure the correctness of information?

- What to do if information gets corrupted by errors?

- How much memory is required to store information?

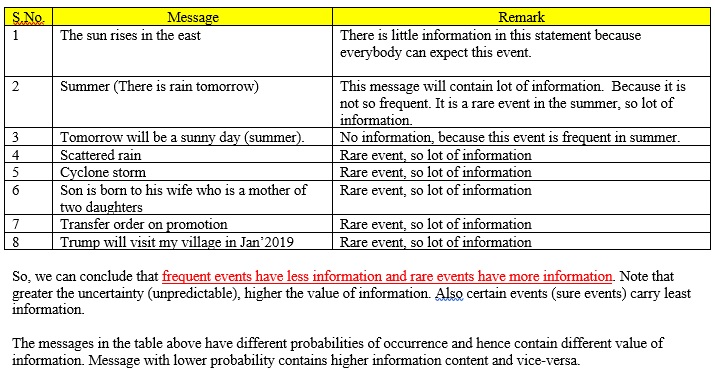

The amount of information contained in a message is related to its probability of occurrence: the less likely the message, the more information it conveys. A news item that is already known conveys no information. Information is always about something (the occurrence of an event, etc.). It may be true or false.

Consider the following messages:

- The sun rises in the east.

- Tomorrow will be a sunny day (summer).

- Scattered rain.

- Cyclone storm.

- A son is born to a mother of two daughters.

- Transfer order on promotion.

- Trump will visit Hosaana in January 2017.

Intuitively, the seven messages above carry different amounts of information.
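One common way to make this intuition quantitative is the self-information of an event with probability p, measured in bits as I = log2(1/p). The sketch below applies it to a few of the messages. The function name `self_information` and all probability values are assumptions chosen only for illustration; they do not come from the text.

```python
import math


def self_information(p: float) -> float:
    """Self-information, in bits, of an event with probability p (0 < p <= 1)."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must lie in the interval (0, 1]")
    return math.log2(1.0 / p)


# Hypothetical probabilities, assumed purely for illustration.
assumed_probabilities = {
    "The sun rises in the east": 1.0,
    "Tomorrow will be a sunny day (summer)": 0.9,
    "Scattered rain": 0.25,
    "Cyclone storm": 0.01,
}

for message, p in assumed_probabilities.items():
    print(f"{message}: p = {p}, I = {self_information(p):.2f} bits")
```

Under these assumed values, the certain event carries zero bits, and the information grows as the event becomes less likely, which matches the ordering suggested by intuition.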